Evaluating model generalization for cow detection in free-stall barn settings: Insights from the COw LOcalization (COLO) dataset

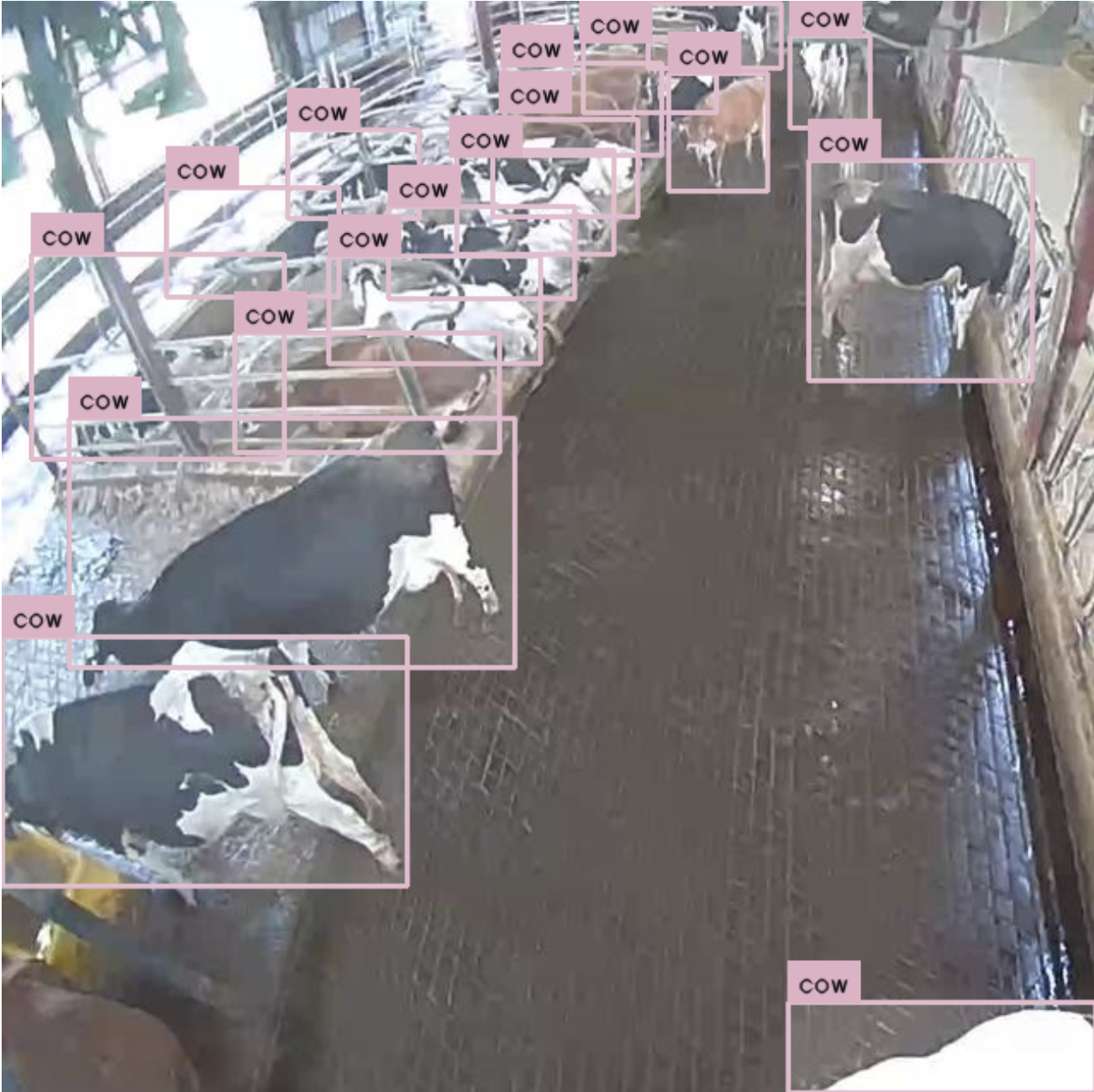

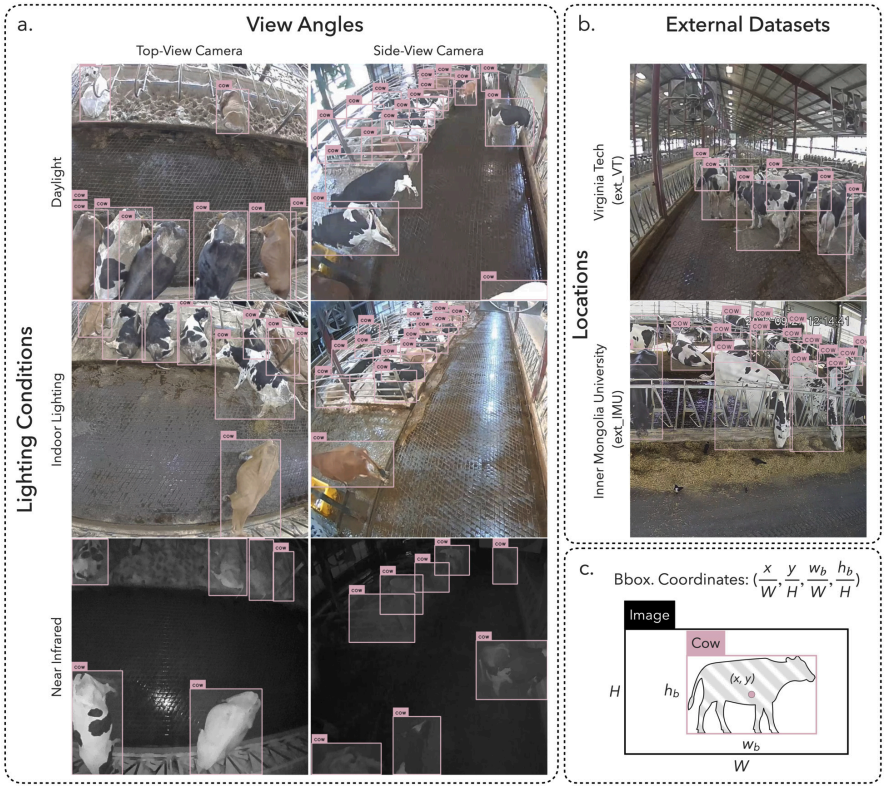

Diverse Environmental Conditions for Robust Benchmarking

To examine model generalization rigorously, the study uses a dataset that captures the same herd under widely varying conditions, including distinct camera perspectives (top-down vs. side-view) and lighting scenarios ranging from daylight to near-infrared. This diversity makes it possible to quantify how much a specific environmental shift, such as a change in camera angle, degrades a model's ability to detect cows relative to a change in illumination.
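One simple way to express such a comparison is the relative drop in a detection metric between the training condition and a shifted condition. The sketch below is illustrative only: the function names and the use of a single scalar score (for example, mean average precision) are assumptions, not the study's actual protocol.

```python
def relative_drop(in_dist_score: float, shifted_score: float) -> float:
    """Fraction of in-distribution performance lost under a shift.

    Both arguments are hypothetical scalar detection scores (e.g. mAP).
    """
    if in_dist_score <= 0:
        raise ValueError("in_dist_score must be positive")
    return (in_dist_score - shifted_score) / in_dist_score


def rank_shifts(in_dist_score: float, shifted_scores: dict) -> list:
    """Sort shift types from most to least damaging.

    `shifted_scores` maps a shift name (e.g. "camera angle") to the score
    measured under that shift.
    """
    drops = {
        name: relative_drop(in_dist_score, score)
        for name, score in shifted_scores.items()
    }
    return sorted(drops.items(), key=lambda item: item[1], reverse=True)
```

Normalizing by the in-distribution score puts shifts of different kinds on the same scale, so a camera-angle change and an illumination change can be ranked against each other.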

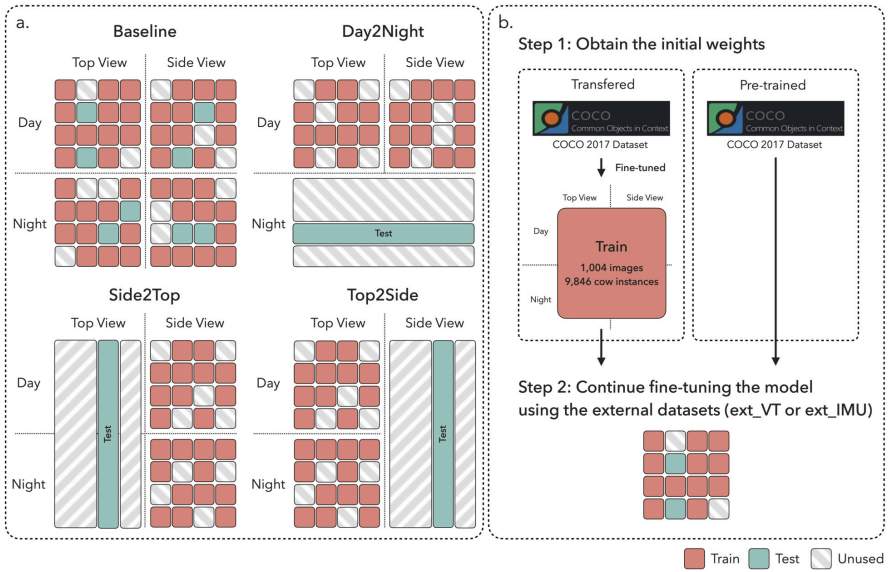

Investigating Data Distribution Shifts and Weight Initialization

The study uses a cross-validation design to probe generalization: models trained on one data distribution (e.g., top-view or daylight) are tested on unseen distributions (e.g., side-view or night). It also evaluates the effect of weight initialization by comparing models initialized with task-specific weights (fine-tuned on cow data) against models initialized with general pre-trained weights (from the COCO dataset) when both are applied to external environments.
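The train-on-one, test-on-another design can be sketched as a grid over data distributions. This is a minimal sketch under stated assumptions: `train_fn` and `eval_fn` are hypothetical callables standing in for whatever training and evaluation routines are used, and the domain names are placeholders.

```python
from itertools import product


def evaluation_grid(domains, train_fn, eval_fn):
    """Train one model per domain and score it on every domain.

    train_fn(domain) -> model and eval_fn(model, domain) -> score are
    hypothetical callables supplied by the caller.
    Returns a dict keyed by (train_domain, test_domain).
    """
    models = {domain: train_fn(domain) for domain in domains}
    return {
        (train, test): eval_fn(models[train], test)
        for train, test in product(domains, repeat=2)
    }


def shifted_only(grid):
    """Keep only the entries where the test domain differs from the training domain."""
    return {pair: score for pair, score in grid.items() if pair[0] != pair[1]}
```

Running the same grid once per initialization scheme (for example, by passing a different `train_fn` for task-specific and general pre-trained starting weights) would give directly comparable tables of in-distribution and shifted scores.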

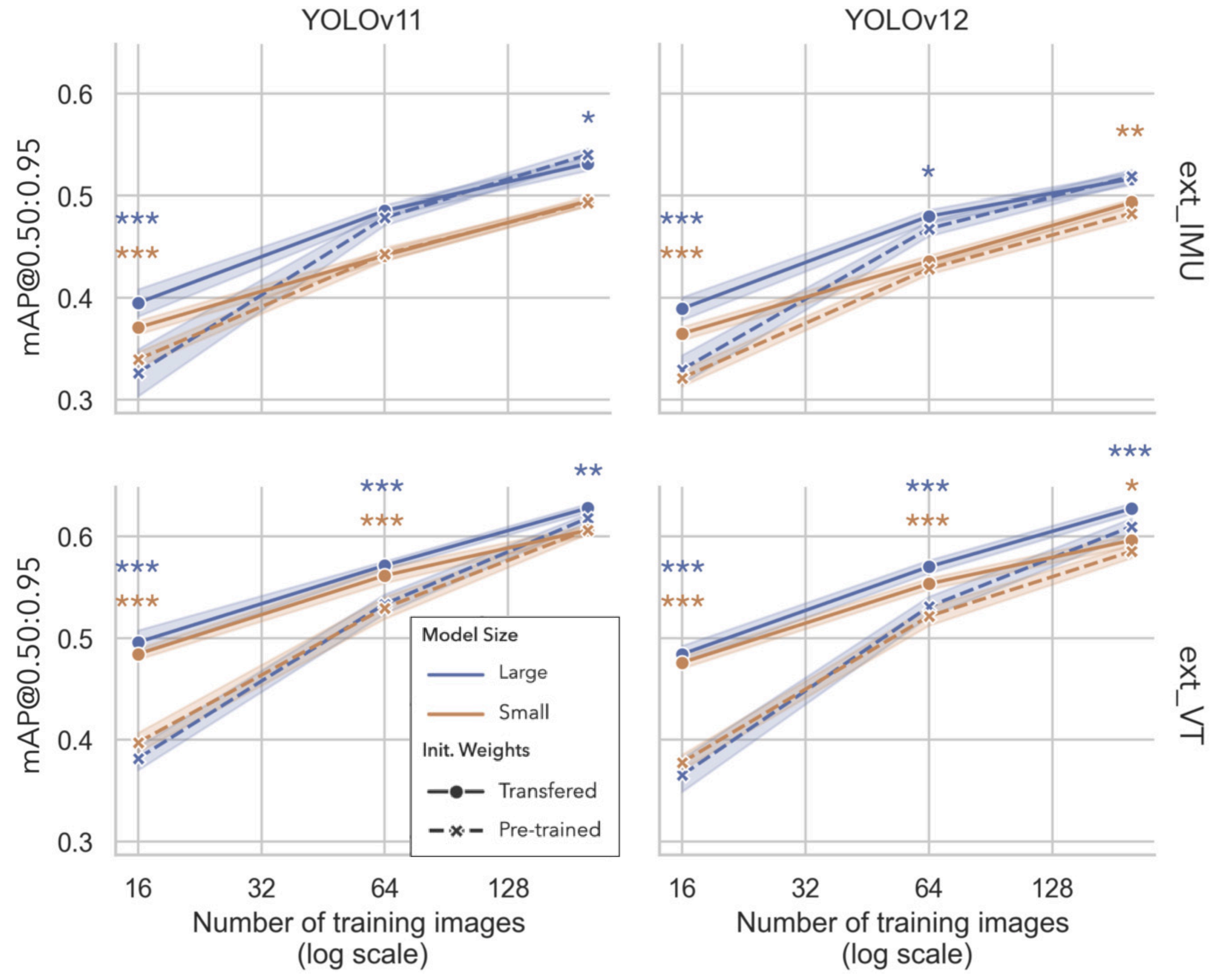

Complexity and Transfer Learning Do Not Guarantee Performance

Contrary to the assumption that larger models and specialized transfer learning always yield better results, the findings show that smaller model variants can outperform larger ones in challenging, heterogeneous tasks like cross-angle detection. Furthermore, the advantage of using transferred weights diminishes significantly when the target environment differs substantially from the source, suggesting that general pre-trained weights are often sufficient and more efficient for diverse deployment scenarios.