VTag: a semi-supervised pipeline for tracking pig activity with a single top-view camera

Automated 'Label-Free' Initialization via Motion

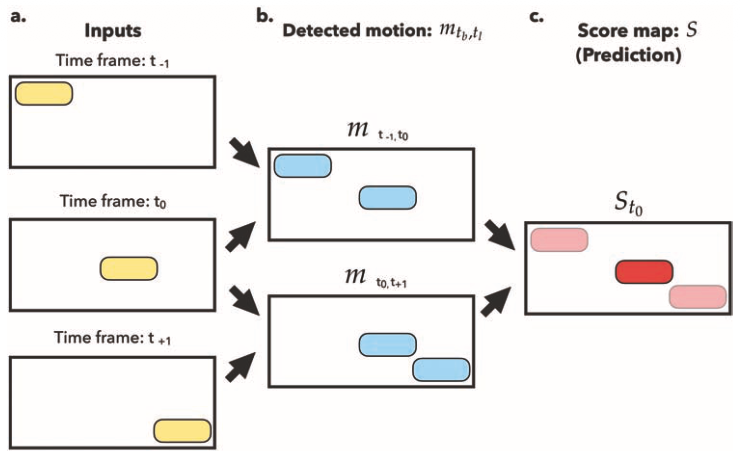

To avoid annotating thousands of training images, VTag uses a statistical motion-detection approach to locate animals automatically. As illustrated in the figure, the algorithm measures pixel variation across neighboring time frames to generate a 'score map' and marks high-motion areas as Pixels of Interest (POI). The software can then propose initial tracking coordinates from movement alone, bypassing the supervised step of teaching the computer what a pig looks like.
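The idea of a motion score map can be illustrated with a minimal sketch. This is not VTag's actual implementation: the function names `motion_score_map` and `pixels_of_interest`, the `window` size, and the percentile cutoff are all assumptions chosen for illustration, and the score here is a plain mean absolute frame difference rather than whatever statistic VTag computes.

```python
import numpy as np

def motion_score_map(frames, t, window=1):
    """Score each pixel by its mean absolute change between frame t
    and its neighboring frames (hypothetical helper, not VTag's API)."""
    frames = np.asarray(frames, dtype=np.float64)  # shape (T, H, W), grayscale
    lo = max(0, t - window)
    hi = min(len(frames) - 1, t + window)
    neighbors = [i for i in range(lo, hi + 1) if i != t]
    if not neighbors:
        raise ValueError("need at least two frames to measure motion")
    diffs = np.abs(frames[neighbors] - frames[t])
    return diffs.mean(axis=0)

def pixels_of_interest(score, percentile=99.0):
    """Return (row, col) coordinates of the highest-scoring pixels.
    The percentile cutoff is an assumed, tunable parameter."""
    threshold = np.percentile(score, percentile)
    # Require a strictly positive score so a static scene yields no POI.
    mask = (score >= threshold) & (score > 0)
    rows, cols = np.nonzero(mask)
    return np.column_stack([rows, cols])
```

Given a grayscale clip stored as an array of shape `(T, H, W)`, calling `pixels_of_interest(motion_score_map(frames, t))` returns candidate coordinates for frame `t`. Grouping those coordinates into one cluster per animal would be the next step toward initial tracking positions.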

Deriving Social Interaction Metrics

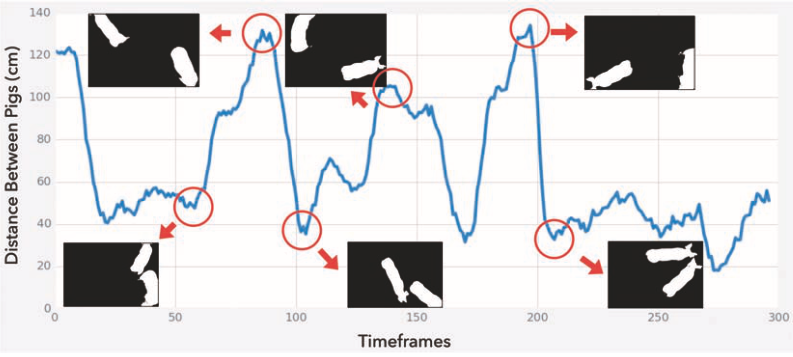

Beyond simple location tracking, VTag provides actionable behavioral insights by continuously monitoring the relative distance between animals to infer social interactions. The figure visualizes how these distance metrics correlate with real-world behaviors: 'valley' values (low distance) identify moments of active engagement, such as chasing or feeding together, while 'peak' values (high distance) indicate periods where the animals are separated in different corners of the pen.
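The distance-based reading of behavior can be sketched as follows. This is an illustrative example under stated assumptions, not the tool's own code: the helper names `pairwise_distance` and `label_proximity`, the pixel-unit coordinates, and the `near` and `far` thresholds are all hypothetical and would need to be chosen for a given pen and camera setup.

```python
import numpy as np

def pairwise_distance(track_a, track_b):
    """Euclidean distance per frame between two tracked animals.
    Each track is an array of shape (T, 2) holding (x, y) in pixels."""
    a = np.asarray(track_a, dtype=np.float64)
    b = np.asarray(track_b, dtype=np.float64)
    return np.linalg.norm(a - b, axis=1)

def label_proximity(distance, near, far):
    """Label each frame as 'close' (valley), 'apart' (peak), or 'neutral'.
    The near/far thresholds are assumed values, not taken from VTag."""
    distance = np.asarray(distance, dtype=np.float64)
    labels = np.full(distance.shape, "neutral", dtype=object)
    labels[distance <= near] = "close"
    labels[distance >= far] = "apart"
    return labels
```

Frames labeled `close` correspond to the valleys described above and are candidates for interactions such as chasing or feeding together. Frames labeled `apart` correspond to the peaks. Distance alone cannot tell one type of interaction from another, so the labels only indicate where to look.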

Accessibility via Graphical User Interface

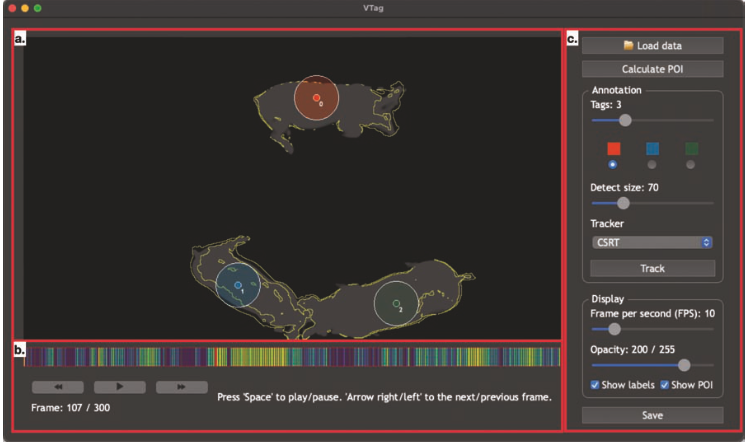

To make the technology accessible to farmers and researchers without programming expertise, VTag is packaged as a software tool with a user-friendly Graphical User Interface (GUI). The figure demonstrates the interface's capabilities: users can visualize tracking overlays in real time, interactively adjust parameters, and manually correct positions if the tracker drifts, which supports a semi-supervised workflow.